Installed at The Minories Galleries, Colchester.

All posts by jg

Introducing ‘play table’

I am pleased to introduce the ‘play table’ project.

“An innovative art technology project that will develop an experiential artwork with which four or more participants may interact simultaneously. A video image projected from above onto a large tabletop surface will be calibrated to allow multiple-participant touch interaction. Participants will be invited to manipulate virtual objects on the surface using bodily interaction resulting in an audiovisual experience that is a direct product of social playfulness.”

It’s an iterative project with key stages taking place in public. This video documents the first stage.

Supported using public funding by the National Lottery through Arts Council England.

More about NetPark

Commission for Metal Culture NetPark

Finally, I’ve found a moment to announce the good news that I have been awarded a commission by Metal Culture to develop an augmented reality artwork for the NetPark project which you can read more about here. I’ve been busy developing technical and artistic approaches to this exciting project. My basic idea is to create an experience that brings the trees of the park to life as they tell stories of events and happenings that have occurred in the park’s 100+ year history. But are they to be believed? Especially given the highly opinionated nature of the storytelling 😉 I hope to post some snippets in the coming weeks. The project is set to go live in September.

Here’s a short feature about the project in today’s Observer Tech Monthly…

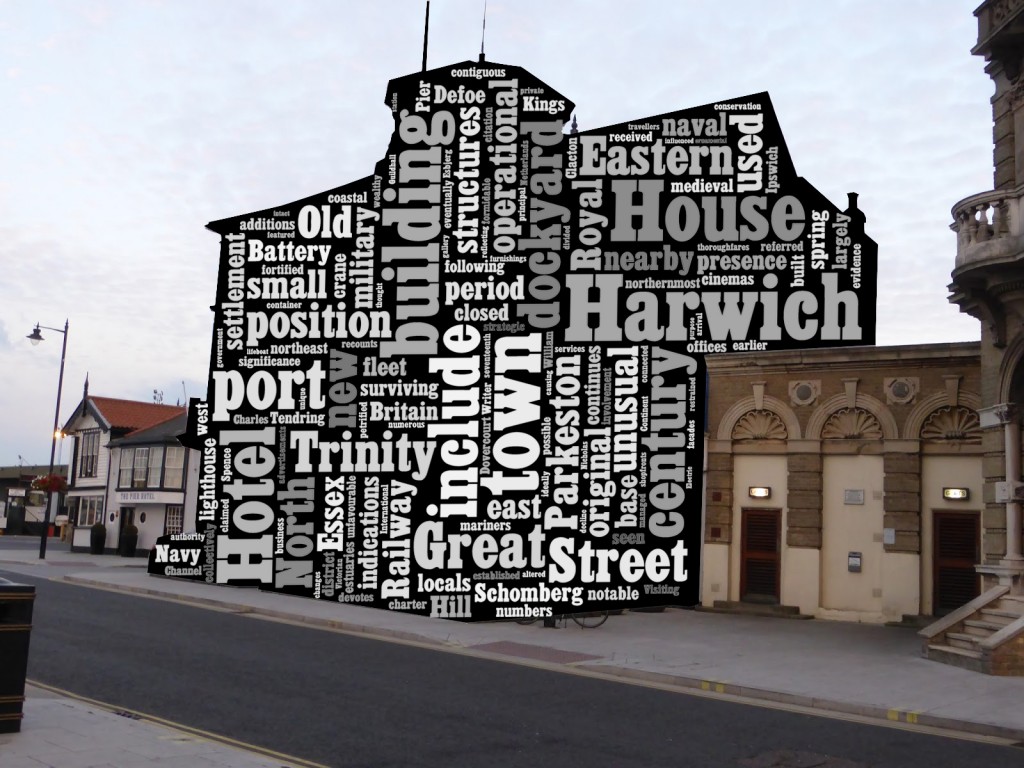

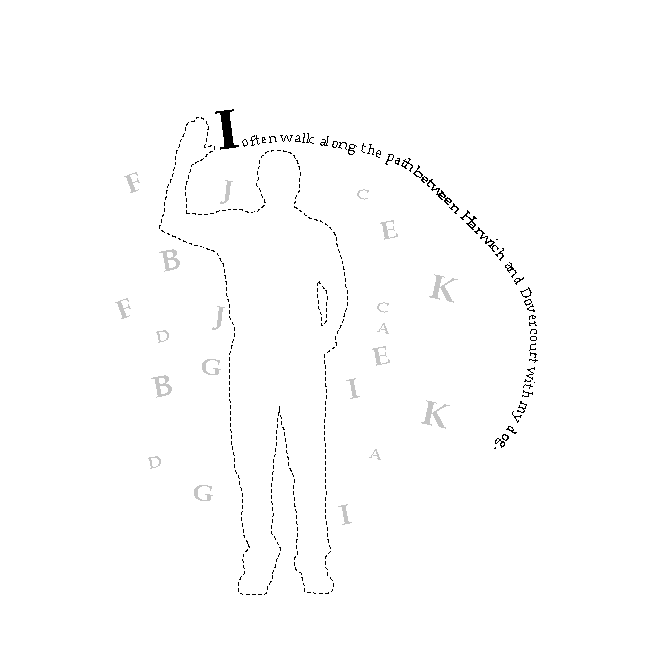

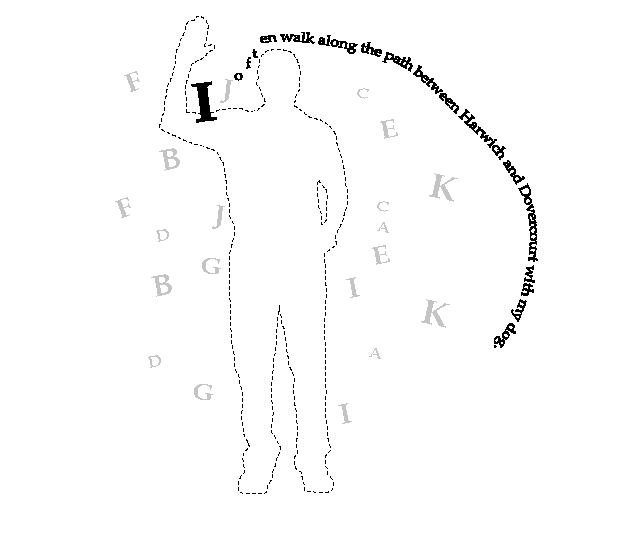

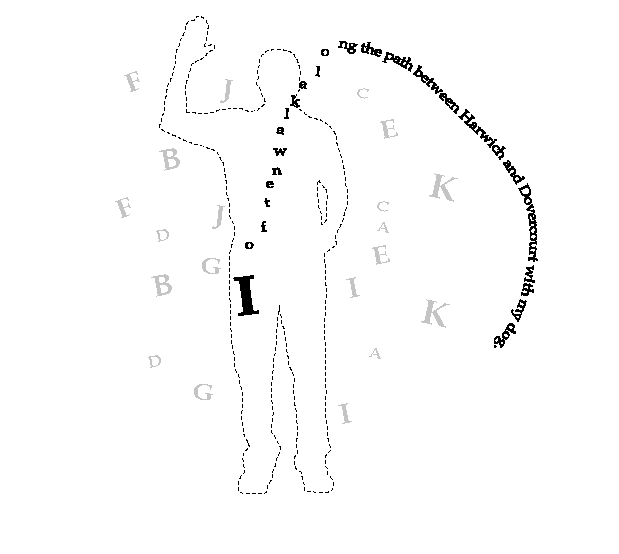

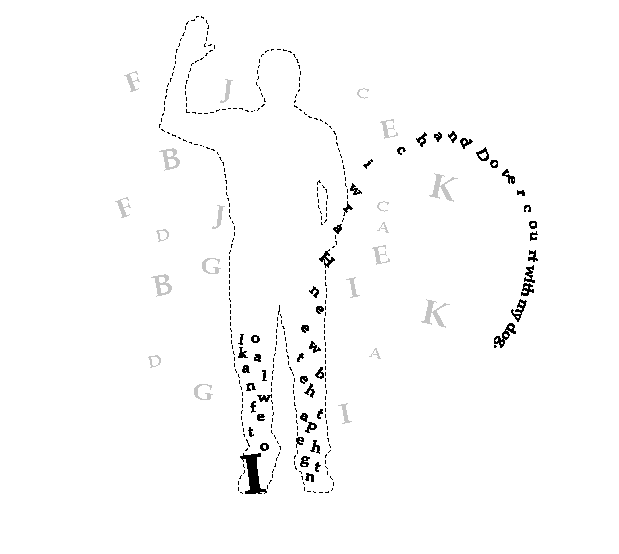

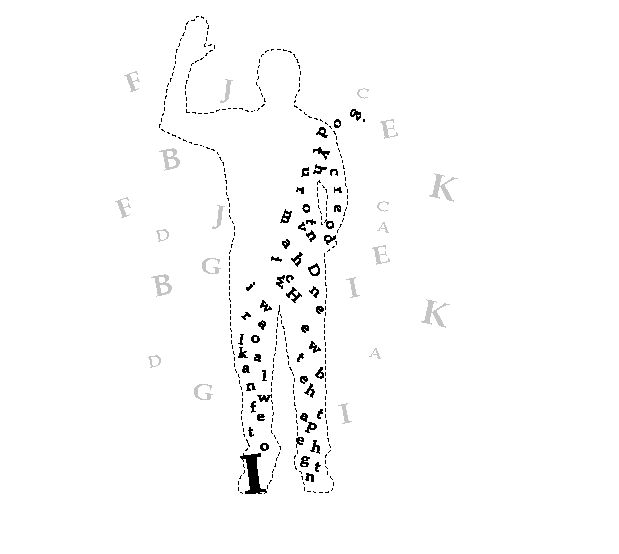

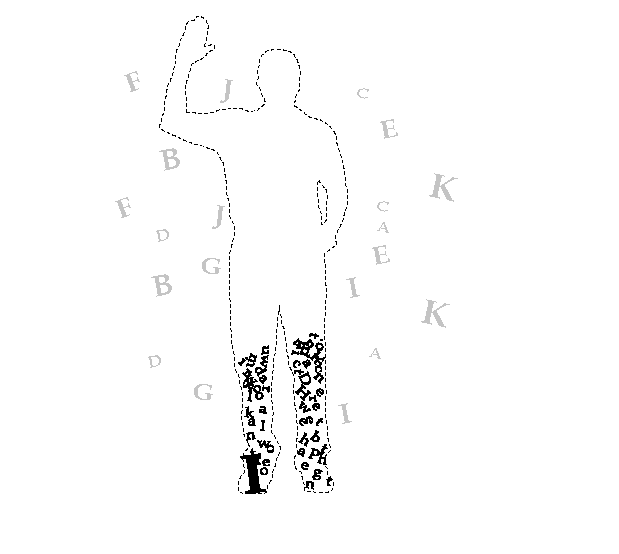

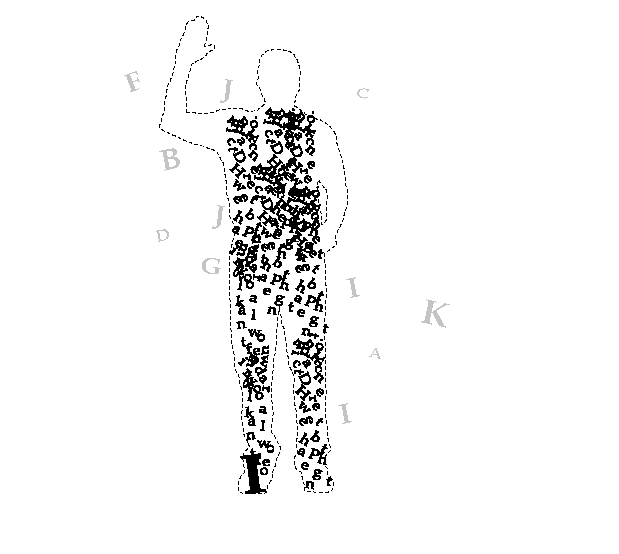

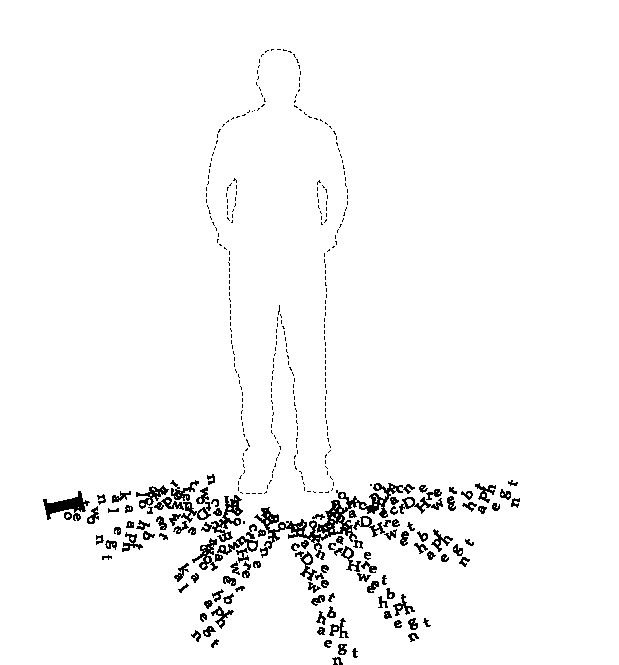

Journey Words Taking Shape

Harwich Concept Development

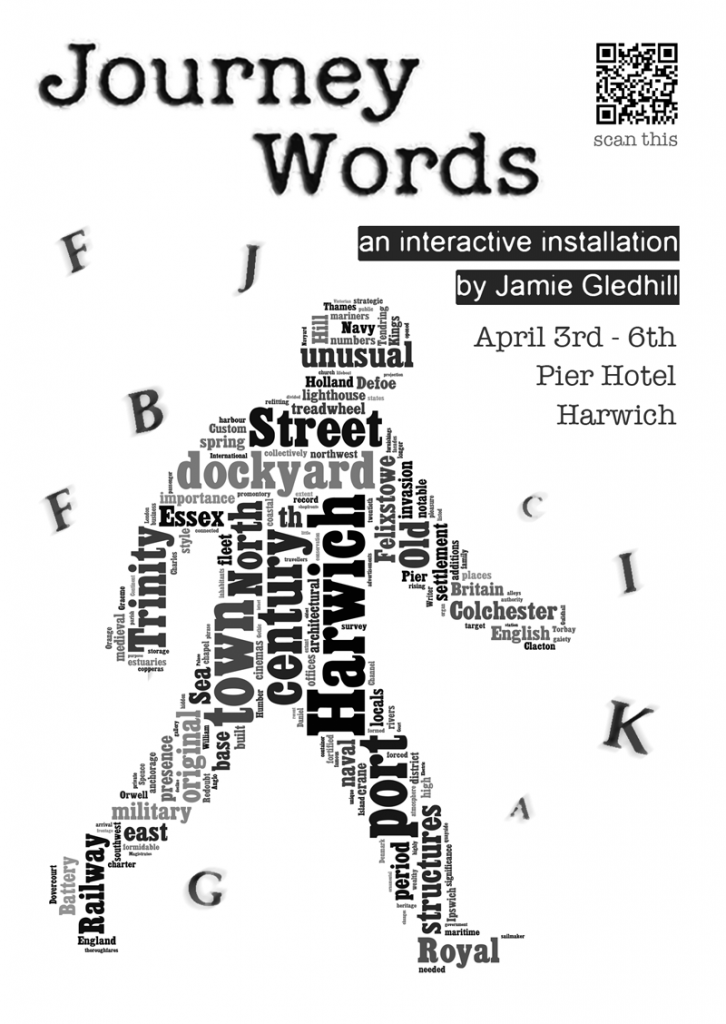

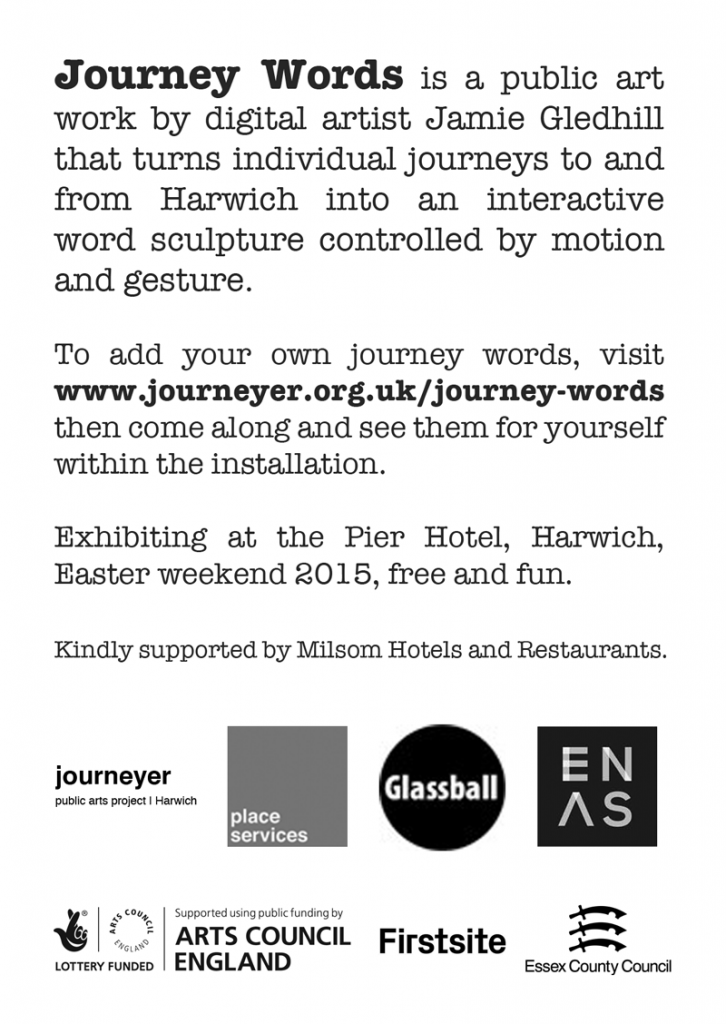

Harwich Commission

I’m happy to say that I’ve been commissioned by Place Services at Essex County Council and ENAS (Essex Network of Artists’ Studios) to produce an ‘experience based activity’ to occur in Harwich within the coming months. This is an exciting opportunity to follow on in the footsteps of Journeyer although mine will be a relatively small footstep in the wake of this larger project!

I’ll be adding some notes and thoughts to this blog as the project progresses. In the meantime, here is a nice Wordle created using text from the Wikipedia entry for Harwich.

Data Flow at FACT

Data Flow is now up and running at the Foundation for Art and Creative Technology (FACT), Liverpool, as part of the Type Motion exhibition which opens tomorrow evening (13/11/14) and doesn’t stop until 08/02/15. Just as I threw the switch to demonstrate the installed work to FACT people, after some time spent tweaking, 50+ students turned up directly in front of the screens and immediately started jumping around and taking pictures. That was a good start! Here’s a short screen capture of the piece being put through its paces by a combination of FACT staff and passers-by.

6 Days in Liverpool

I was up in Liverpool again recently for a 6-day residency as part of the art-tech, boundary-pushing Syndrome project curated and produced by Nathan Jones.

‘Choros’ was a collaboration between myself and sound artist Stefan Kazassoglou of Kinicho. Artist and poet Steven Fowler gave a martial-arts-derived performance for the opening event. The venue was 24 Kitchen St which is a fascinating project in its own right – a constantly evolving arts-centric space at the very beating heart of Liverpool’s Baltic Quarter, full of positivity and an admirable can-do attitude.

Inspired by a visit to the venue, and spurred on at all times by Nathan Jones, Stefan Kazassoglou and I set ourselves the brief of creating a ‘roomstrument’ – an interactive space capable of responding visually and sonically to physical presence and movement, similar to the way in which a musical instrument responds to the physicality of being played. The roomstrument should be ‘playable’ by an individual or by a group. It would be an experimental piece designed to make performers of participants by stimulating and rewarding performative play. The name Choros came about as an anglicisation of the Greek word ‘Koros’ which happens to mean both dance and room – the synonymity appealed to us.

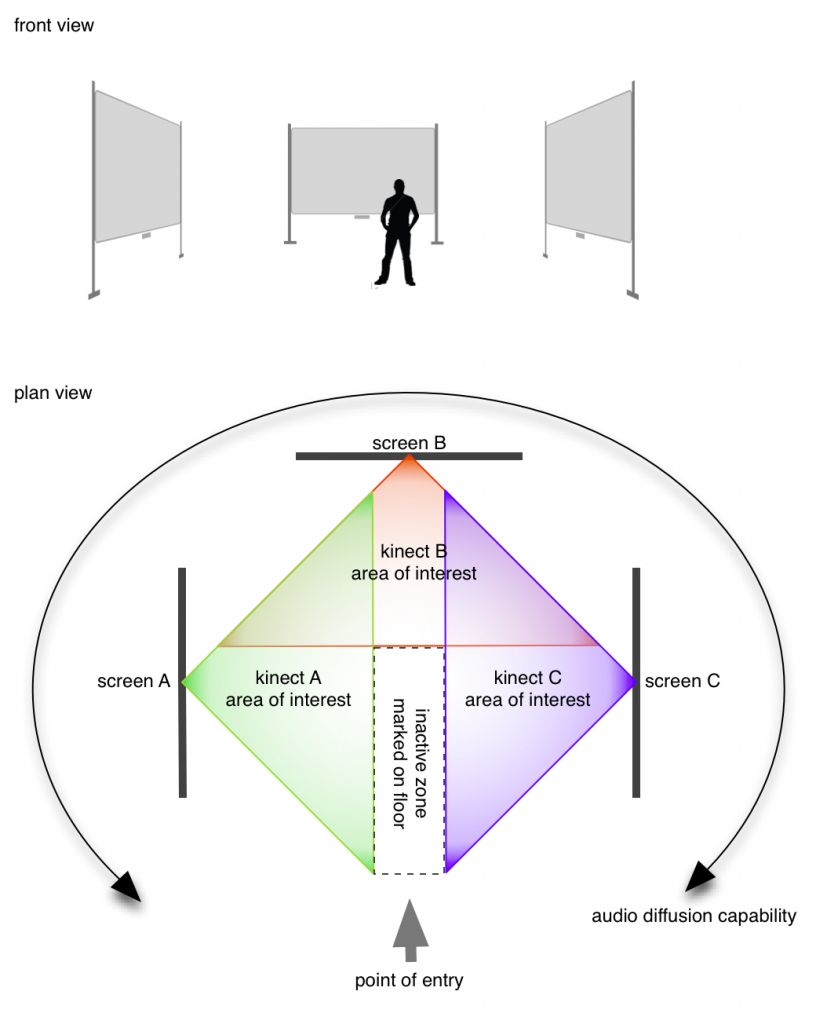

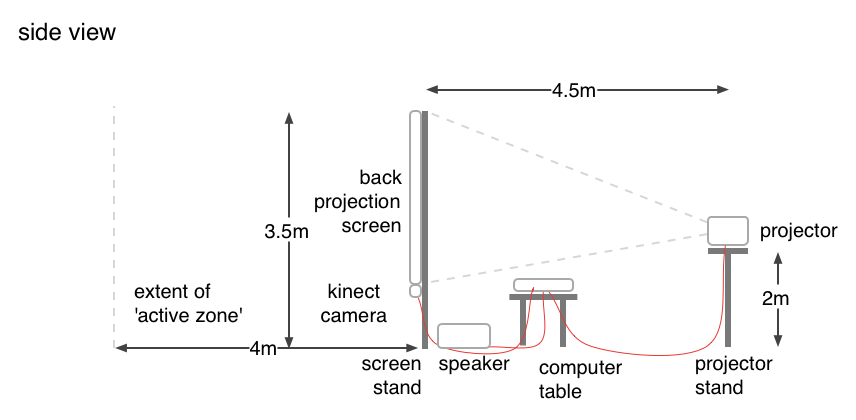

With the benefit of continued encouragement and support from Syndrome, we specified 3 projectors, 3 screens, 3 Kinect cameras and an 8-speaker ambisonic sound system to create a kind of open-sided cube that would form the basis of an interactive space. In the end this relatively complex rig was constructed with a minimum of fuss by the Syndrome team and suppliers, and we found ourselves facing a technically exacting set-up with plenty of calibrated communication required between video and audio processing. After the inevitable teething issues, we kickstarted our creative workflow and got a basic interactive audiovisual framework up and running on day 4 of the residency. That gave us only 1 day to tweak before the incredible opening performance featuring Steven Fowler. The following day we opened up the work to the wider public…

At the centre of the installation was a lit ‘hotspot’ which we invited visitors to use as a starting point to explore the interactive space. By reaching out towards or approaching any of the 3 screens from this hotspot, a visitor revealed a series of ‘control cubes’, each of which triggered a sound in the corresponding part of the ambisonic audio space. Thus a control cube in the bottom left part of a screen triggered an audio element spatially placed at the corresponding location. For the opening performance we used a series of Japanese martial arts-inspired vocal samples recorded by Steven Fowler himself.
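To make the screen-to-sound mapping concrete, here is a minimal sketch of how a control cube's position on a screen could be turned into a direction and then into first-order ambisonic (B-format) gains. It is an illustration under stated assumptions, not the code used in Choros: the screen layout, the angular size of each screen and the names `cube_direction` and `encode_first_order` are all hypothetical.

```python
import math

# Assumed layout (not from the installation): azimuth in degrees of each
# screen centre as seen from the hotspot, with positive angles to the left.
SCREEN_CENTRE_AZIMUTH = {"left": 90.0, "front": 0.0, "right": -90.0}
SCREEN_WIDTH_DEG = 90.0   # assumed horizontal angle covered by one screen
SCREEN_HEIGHT_DEG = 60.0  # assumed vertical angle covered by one screen


def cube_direction(screen, u, v):
    """Map a cube at normalised screen position (u, v) to (azimuth, elevation).

    u runs 0..1 from the left edge to the right edge of the screen and
    v runs 0..1 from the bottom edge to the top edge.
    """
    azimuth = SCREEN_CENTRE_AZIMUTH[screen] - (u - 0.5) * SCREEN_WIDTH_DEG
    elevation = (v - 0.5) * SCREEN_HEIGHT_DEG
    return azimuth, elevation


def encode_first_order(azimuth_deg, elevation_deg):
    """Return traditional B-format gains (W, X, Y, Z) for one direction."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = 1.0 / math.sqrt(2.0)          # omnidirectional component
    x = math.cos(az) * math.cos(el)   # front/back
    y = math.sin(az) * math.cos(el)   # left/right
    z = math.sin(el)                  # up/down
    return w, x, y, z


# A cube in the bottom left corner of the front screen.
az, el = cube_direction("front", 0.0, 0.0)
gains = encode_first_order(az, el)
```

With these assumptions, a cube in the bottom left of the front screen gets a positive Y gain (to the left) and a negative Z gain (below ear height), so the sample would be heard from the matching corner of the space.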

Subsequent sound sets featured cello samples specially recorded by Stefan Kazassoglou and vocal samples from 20th Century philosopher and mathematician Alfred Korzybski discussing how abstraction creates an illusion of reality.

Moving around quickly inside the space triggered a rapid succession of audio elements, resulting in a dramatic if slightly overwhelming mix of near-simultaneous sounds issuing from various locations in 3D space. Conversely, moving more carefully and identifying the points at which audio elements triggered gave visitors the chance to learn how to ‘play’ the work more as an actual ‘roomstrument’.

This experimental piece was a great success judging by the feedback and comments from visitors. As an artistic collaboration, I am sure it has inspired each one of us involved at both an individual and a collaborative level. Certainly Stefan Kazassoglou and I are keen to develop the ideas and techniques at the heart of Choros…

Massive thanks to all who helped and supported in spirit or in person.

The after-glow of Luminescence

Luminescence was installed at firstsite for 3 days in April. Due to the crescendo of effort required to pull the project together, followed soon after by Easter holidays and the beginning of a new term, it has taken me a little while to get round to writing up my thoughts and observations.

Not long after I wrote the last post discussing my previous show Electricus, I finally got hold of a Kinect sensor. Having worked exclusively with webcam video input for the last year or more, it was a good time to cross the line into the world of the depth image and positional information. I’m particularly happy that I really ‘maxed out’ the webcam and learned so much from operating within the constraints of RGB video over an extended period before coming in from the cold. The way I see it there are two main directions to go with the (version 1) Kinect – skeleton tracking, which provides fine control for a restricted number of participants, or exploitation of the depth image, which just gives z information in a grainy black and white low-res feed. The first approach sounds way more groovy, right? But the second approach is to my mind essentially more open in the sense that it can be used to set up multi-participant interactivity with the minimum of calibration or initialisation. I really like the idea of an ‘uninvigilated’ interactive space as opposed to the invigilated version in which perhaps only 2 are allowed to approach the sensor at a time. But of course, it’s all a matter of fitness for purpose and I’m sure I’ll be maxing out the skeleton tracking functionality before long!

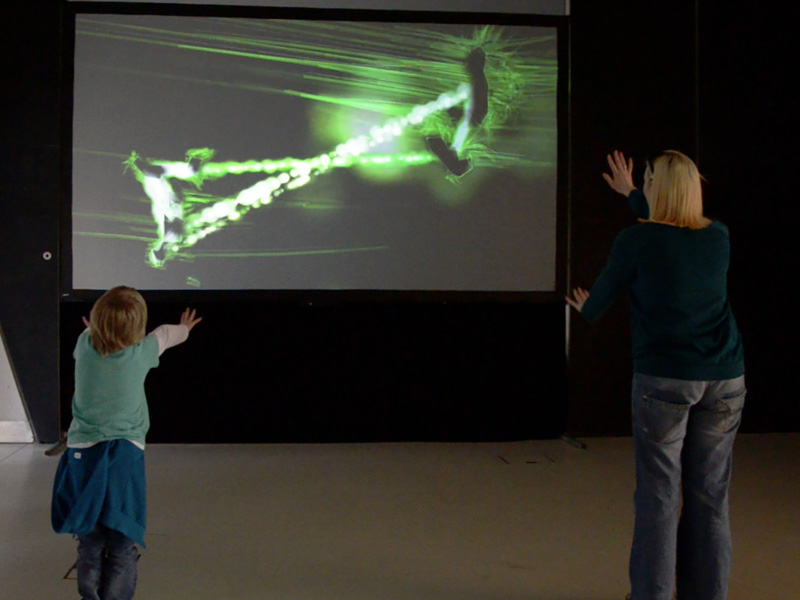

So what of the depth image? For a start, using a threshold-type filter it is simple to set up an active zone in front of the sensor, thereby knocking out the background or any other unwanted objects. For Luminescence, I developed functionality that locates objects/people and draws lines around them in an approximation of their silhouette. Because the lines are drawn using a cluster of points located on the edge of a person/object’s shape, it is straightforward enough to calculate the average position of a cluster, which roughly equates to the centre of a drawn shape. Once more than one shape occurs, the piece joins them together dramatically. So an individual might dwell at the edge of the active zone and experiment by putting only parts of the body (e.g. hands, face) into the active zone and seeing how these all connect up. A group of participants might just jump around in front of the screen and watch how the connections between their respective body shapes light the screen up.
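The depth-image steps described above (threshold to an active zone, find the edge points of each shape, average them, then join the shapes) can be sketched in a few lines of plain Python. This is a rough illustration, not the Luminescence code: the depth frame is assumed to be a grid of millimetre readings, shapes are found with a simple flood fill, and every function name here is hypothetical.

```python
from itertools import combinations

NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def active_mask(depth, near, far):
    """True where a depth reading lies inside the active zone [near, far]."""
    return [[near <= d <= far for d in row] for row in depth]


def find_shapes(mask):
    """Group active pixels into 4-connected shapes with an iterative flood fill."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    shapes = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            stack, shape = [(x, y)], []
            while stack:
                cx, cy = stack.pop()
                shape.append((cx, cy))
                for dx, dy in NEIGHBOURS:
                    nx, ny = cx + dx, cy + dy
                    if 0 <= nx < w and 0 <= ny < h and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        stack.append((nx, ny))
            shapes.append(shape)
    return shapes


def edge_points(shape, mask):
    """Keep the pixels of a shape that touch the background or the frame border."""
    h, w = len(mask), len(mask[0])

    def outside(x, y):
        return not (0 <= x < w and 0 <= y < h) or not mask[y][x]

    return [(x, y) for x, y in shape
            if any(outside(x + dx, y + dy) for dx, dy in NEIGHBOURS)]


def centroid(points):
    """Average position of a cluster of points."""
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


def connections(depth, near, far):
    """Return one line (a pair of centres) for every pair of shapes in the zone."""
    mask = active_mask(depth, near, far)
    centres = [centroid(edge_points(s, mask)) for s in find_shapes(mask)]
    return list(combinations(centres, 2))
```

Setting `near` above zero also discards pixels where the sensor reports no reading, assuming those arrive as 0. A real-time piece would more likely lean on an image-processing library for the shape finding, but the logic stays the same.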

Visitor response was very positive. I was in the installation space or close by for the duration of the event at firstsite which gave me ample opportunity to observe, chat with and generally appreciate participant interaction with the piece.