After a period of early ideation, thinking about the application of gestural interaction within an information discovery experience, I visited a couple of museums to take stock of existing interaction design in context. Of particular note was the Museum of London, visited on the 11th of July 2019, which has a large number of interactive displays incorporating touch. I was particularly interested in the touch experience about disease in London, which had some game-like mechanics. It was well designed, with a bespoke screen and appealing interactive elements; however, for one reason or another it was not working properly. Users were becoming frustrated with the lack of responsiveness to touch, which may have been the result of a dirty or misaligned sensor. On my journey back to Norwich, I reflected upon the maturity and ubiquity of touch screen design for museums. This led me to consider gestural interaction through motion tracking as an emergent form of interaction design, specifically the Leap Motion Controller, or LMC.

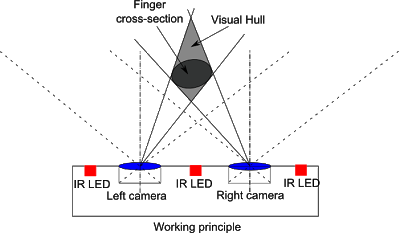

The LMC is a small USB device designed to face upwards on a desktop or outwards on the front of a VR headset. It uses two infrared cameras and three infrared LEDs to track hand position and movement in 3D space.

From a technical perspective, the LMC is relatively mature, with several iterations of drivers and accompanying API documentation. One point of note is that since the Orion release in February 2016, the LMC only has vendor-supported API libraries for the game development environments Unity and Unreal Engine, meaning that JavaScript, and therefore HTML5 as a platform, is not natively supported. Neither are ‘gestures’ (the LMC versions of 2D gestures commonly used with handheld touch screen devices, e.g. swipe). The emphasis is now on the use of the LMC, which is by nature a 3D-aware controller, in 3D space with VR as the principal target platform. Despite this repositioning of the LMC, thanks to a well-documented API and active community, there are many experimental applications of the technology outside of games and VR.

A useful primer on the LMC within the context of 3D HCI is the review by Bachmann, Weichert & Rinkenauer (2018), which notes:

- the use of touchless interaction in medical fields for rehabilitation purposes

- the suitability of LMC for use in games and gamification

- the use by children with motor disabilities

- textual character recognition (‘air-writing’)

- sign language recognition

- use as a controller for musical performance and composition.

A common concern is the lack of haptic feedback offered by the LMC: “the lack of hardware-based physical feedback when interacting with the Leap Motion … results in a different affordance that has to be considered in future physics-based game design using mid-air gestures” (Moser & Tscheligi, 2015). As Leap Motion has recently (May 2019) been acquired by Ultrahaptics, a specialist in creating the sensation of touch in mid-air, this situation is likely to change.

On the design of intuitive 3D gestures for differing contexts, Cabreira & Hwang (2016) note that different gestures are reportedly easier to learn for older or younger users, that visual feedback is particularly important so that the user knows when their hand is being successfully tracked, and that clear instruction is imperative to assist learning.

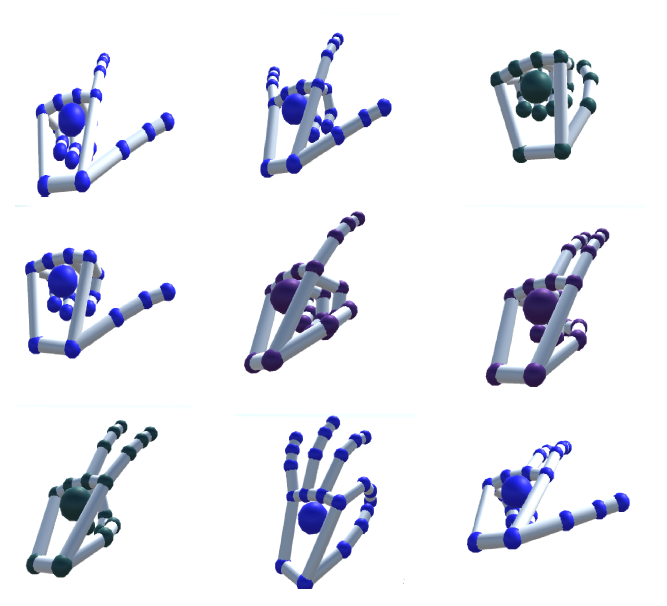

Shao (2016) provides a comprehensive, technically oriented introduction to the LMC, including a catalogue of gesture types and associated programmatic techniques.

Shao also notes the problem of self-occlusion, where one part of the user’s hand obscures another part, resulting in misinterpretation of hand position by the LMC.

In Bachmann, Weichert, & Rinkenauer (2015), the authors use Fitts’ law to compare the efficiency of using the LMC as a pointing device vs. using a mouse. The LMC comes out worse in this context, exhibiting an error rate of 7.8% vs 2.8% for the mouse. Reflecting upon this issue led me to a report concerning the use of expanding interaction targets (McGuffin & Balakrishnan, 2005) and the general idea of making an interaction easier to achieve by temporarily manipulating the size of the target. In fact, an approach I have subsequently adopted is to make a pointing gesture select the nearest valid target, akin to gaze interaction in VR where the viewing direction of the headset is always known and can be used to manage interaction. In VR, this is often signified by an interactive item changing colour when intersected by a crosshair or target rendered in the middle of the user’s field of vision.
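The nearest-valid-target idea can be sketched in a few lines. This is only a minimal illustration in Python, not the code used in the project: the `Target` structure, the `enabled` flag as the test for validity, the 2D screen coordinates and the optional `max_distance` cut-off are all assumptions made for the example.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Target:
    """A selectable on-screen item (hypothetical structure for illustration)."""
    name: str
    x: float
    y: float
    enabled: bool = True  # assumption: only enabled targets count as valid


def nearest_valid_target(
    pointer_x: float,
    pointer_y: float,
    targets: Sequence[Target],
    max_distance: float = math.inf,
) -> Optional[Target]:
    """Return the enabled target closest to the pointer, or None.

    max_distance is an assumed cut-off so that pointing far away from
    every target selects nothing.
    """
    best: Optional[Target] = None
    best_distance = math.inf
    for target in targets:
        if not target.enabled:
            continue  # skip targets that cannot currently be selected
        distance = math.hypot(target.x - pointer_x, target.y - pointer_y)
        if distance > max_distance:
            continue  # too far from the pointer to count as intended
        if distance < best_distance:
            best, best_distance = target, distance
    return best


# Example: the pointer is projected to screen position (120, 125).
targets = [
    Target("map", 100, 100),
    Target("timeline", 400, 120),
    Target("archive", 110, 130, enabled=False),
]
selected = nearest_valid_target(120, 125, targets)
print(selected.name if selected else "nothing selected")
```

Because the pointer only has to be closer to the intended item than to any other valid item, the effective target area grows to fill the space around each item, which is similar in spirit to temporarily expanding the target.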

References

Bachmann, D., Weichert, F. & Rinkenauer, G. (2015) Evaluation of the Leap Motion Controller as a New Contact-Free Pointing Device. Sensors 2015, 15, 214-233.

Bachmann, D., Weichert, F. & Rinkenauer, G. (2018) Review of Three-Dimensional Human-Computer Interaction with Focus on the Leap Motion Controller. Sensors 2018, 18, 2194.

Cabreira, A. & Hwang, F. (2016) How Do Novice Older Users Evaluate and Perform Mid-Air Gesture Interaction for the First Time? In Proceedings of the 9th Nordic Conference on Human-Computer Interaction (NordiCHI ’16).

McGuffin, M. & Balakrishnan, R. (2005) Fitts’ Law and Expanding Targets: Experimental Studies and Designs for User Interfaces. ACM Transactions on Computer-Human Interaction, Vol. 12, No. 4.

Moser, C. & Tscheligi, M. (2015) Physics-based gaming: exploring touch vs. mid-air gesture input. IDC 2015.

Shao, L. (2016) Hand movement and gesture recognition using Leap Motion Controller. Stanford EE 267, Virtual Reality, Course Report [online] available at https://stanford.edu/class/ee267/Spring2016/report_lin.pdf (accessed 29/8/19).